A few weeks ago I was researching an entity and wanted to keep my recon completely hands-off, never touching the target’s systems directly, to see what I could still obtain. The answer was: a lot. A heck of a lot, and some of it incredibly valuable from an attacker’s perspective. I had also been meaning to come up with some interesting workshops that my good friends in the Bournemouth Uni Computing and Security Society could have fun with. From these seeds, an idea was born: challenge people to find as much as possible using these methods, awarding points based on how valuable each finding would be to an attacker. Although it’s still very much a work in progress, I thought it was worth sketching out the idea so that people can try it and give me feedback.

This is something I hope can be of use to many people – the relevance to the pentesting community is pretty clear, but I also want to encourage my homies on the blue team to try it. Turned against your own organisation, these techniques can be of massive benefit, because they show you what an attacker can learn about you without ever touching your network.

Preparation

Select a target entity with an internet presence. The game’s organisers should research this entity themselves first, both to anticipate the answers competitors are likely to give, which makes scoring easier, and possibly to produce a map of the target’s known systems (for example, its IP ranges) so that the no-contact rule can be enforced.

Set up an environment suitable for monitoring the competitors’ traffic and enforcing the rule. This might be an access point with firewall logging that identifies anyone connecting directly to the target’s systems, but organisers can use any method they deem suitable. If you trust participants to respect the rules, you could skip this part altogether. At the very least, it’s a good idea to have a quiet space with decent internet, enough room for everyone taking part, and no disturbances for the duration.
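If the access point logs with iptables `LOG` rules, a few lines of Python can compare each logged destination against the organisers’ map of the target’s address space. This is a minimal sketch: the network ranges below are hypothetical documentation ranges standing in for that map, and it assumes log lines carry iptables-style `SRC=` and `DST=` fields.

```python
import ipaddress
import re

# Hypothetical: the organisers' map of the target's known address space.
TARGET_NETWORKS = [
    ipaddress.ip_network("203.0.113.0/24"),
    ipaddress.ip_network("198.51.100.16/28"),
]

# Assumed log format: iptables LOG lines containing "SRC=<ip> ... DST=<ip>".
FIELD_RE = re.compile(r"\bSRC=(\S+)\s.*?\bDST=(\S+)")


def flag_contacts(log_lines, target_networks=TARGET_NETWORKS):
    """Return (source, destination) pairs for packets sent into target space."""
    hits = []
    for line in log_lines:
        match = FIELD_RE.search(line)
        if not match:
            continue  # not a packet log line
        src, dst = match.groups()
        try:
            dst_addr = ipaddress.ip_address(dst)
        except ValueError:
            continue  # skip malformed addresses
        # Membership is simply False when IPv4/IPv6 versions differ.
        if any(dst_addr in net for net in target_networks):
            hits.append((src, dst))
    return hits
```

The source address tells the judges which competitor’s machine made the contact, assuming each laptop gets a stable DHCP lease on the game network.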

I would suggest that all participants should have a Shodan account. For students, good news – free! (at least in the US and UK, I don’t know about other locations)
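Shodan results map quite directly onto the “Internet exposed services” rows of the points table. The sketch below tallies unique protocols and unique server software from a list of banner dictionaries. It assumes the Shodan banner fields `port`, `transport`, `product` and `_shodan.module`; treating `transport/port` as the protocol when no module name is present is a simplifying assumption, not a Shodan convention.

```python
from collections import Counter


def summarise_banners(banners):
    """Collect unique protocols and count server products in Shodan-style banners."""
    protocols = set()
    products = Counter()
    for banner in banners:
        module = banner.get("_shodan", {}).get("module")
        if module:
            protocols.add(module)
        else:
            # Assumed fallback: identify the service by transport and port.
            protocols.add(f"{banner.get('transport', 'tcp')}/{banner.get('port')}")
        product = banner.get("product")
        if product:
            products[product] += 1
    return protocols, products


def exposed_service_points(banners):
    """1pt per unique protocol plus 1pt per unique server software."""
    protocols, products = summarise_banners(banners)
    return len(protocols) + len(products)
```

With the official `shodan` Python library, `shodan.Shodan(API_KEY).search(query)["matches"]` returns a list of such banners. Querying Shodan only touches Shodan’s own database, so it stays within the no-contact rule.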

Other tools that competitors should probably brush up on are whois, dig, and of course ~~Google~~ your favourite search engine.
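Output from whois, dig and search results quickly piles up, and the public FQDNs in it are worth 1pt each. A small helper can pull unique hostnames under the target’s domain out of any pasted text. This is a sketch under assumptions: `extract_hostnames` is a hypothetical helper, and it only recognises plain ASCII hostnames.

```python
import re


def extract_hostnames(text, domain):
    """Return sorted unique hostnames in `text` that sit under `domain`."""
    # A DNS label: letters, digits and hyphens, not starting or ending with a hyphen.
    label = r"[a-z0-9](?:[a-z0-9-]{0,61}[a-z0-9])?"
    pattern = re.compile(
        rf"\b(?:{label}\.)+{re.escape(domain.lower())}"
        # Reject lookalikes such as www.example.com.evil.net, but allow
        # the trailing dot that dig prints on fully qualified names.
        r"(?![\w-]|\.[a-z0-9])"
    )
    return sorted(set(pattern.findall(text.lower())))
```

For example, feeding it a dig answer section plus a few copied search results returns a deduplicated list ready to paste into a submission.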

Rules

Competitors have a fixed time period to discover and record information useful to an attacker who wants to break into the target’s network. Each piece of information scores points according to how useful it is for that task. The organisers can set the length of the period, but I think one to two hours is sensible. The highest score at the end of the period wins.

At the end of the period, competitors must submit a list of all their discoveries. For each one, the list must include either a description of how it was found or a link to the source, sufficient for the judges to verify its accuracy. Any discovery that cannot be verified from the information given receives no points.

No active connection to the target’s networks is permitted, and this includes connections made through VPNs or open proxies. Making active connections will be penalised by a points deduction or disqualification, at the judges’ discretion.
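For judges keeping score, the rules above boil down to: only verified, sourced findings count, active contact costs points or gets you removed, and the highest total wins. This is a minimal sketch of that tally; the `Finding` and `Entry` structures are hypothetical, and the point values are taken from the points table by hand.

```python
from dataclasses import dataclass, field


@dataclass
class Finding:
    item: str               # row of the points table, e.g. "ASNs"
    detail: str             # what was actually found
    points: int             # value taken from the points table
    source: str = ""        # link, or description of how it was found
    verified: bool = False  # set by the judges after checking the source


@dataclass
class Entry:
    team: str
    findings: list = field(default_factory=list)
    penalty: int = 0        # deduction for active contact, at the judges' discretion

    def score(self):
        # Findings without a source, or that the judges could not confirm, earn nothing.
        earned = sum(f.points for f in self.findings if f.source and f.verified)
        return earned - self.penalty


def leaderboard(entries, disqualified=()):
    """Rank the remaining entries by score, highest first."""
    eligible = [e for e in entries if e.team not in disqualified]
    return sorted(eligible, key=lambda e: e.score(), reverse=True)
```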

Points

| Item | Score |

|---|---|

| Domains other than the target’s primary domain | 1pt each |

| * if domain can be confirmed as internal AD domain | 3pts each |

| Subsidiaries (wholly or majority owned) | 1pt each |

| Joint ventures | 2pts each |

| Public FQDNs/hostnames | 1pt each |

| Internet exposed services | |

| * per unique protocol presented externally | 1pt each |

| * per unique server software identified | 1pt each |

| * per framework or application running on server (e.g. presence of struts, coldfusion, weblogic, wordpress) | 3pts each |

| * each version with known vulnerability | |

| * RCE | 10pts each |

| * other vulns | 3pts each |

| ASNs | 1pt each |

| Format of employees’ emails | 3pts |

| Internal hostname convention | 5pts |

| Internal hostnames | 2pts each |

| Internal username convention | 5 pts |

| Internal usernames | 2pts each |

| Configuration fails (e.g. exposed RDP/SMB/SQL/Elastic/AWS bucket) | 5pts each |

| Individual confidential documents on internet | 3pts each |

| Large archive or collection of confidential documents on internet | 8pts each |

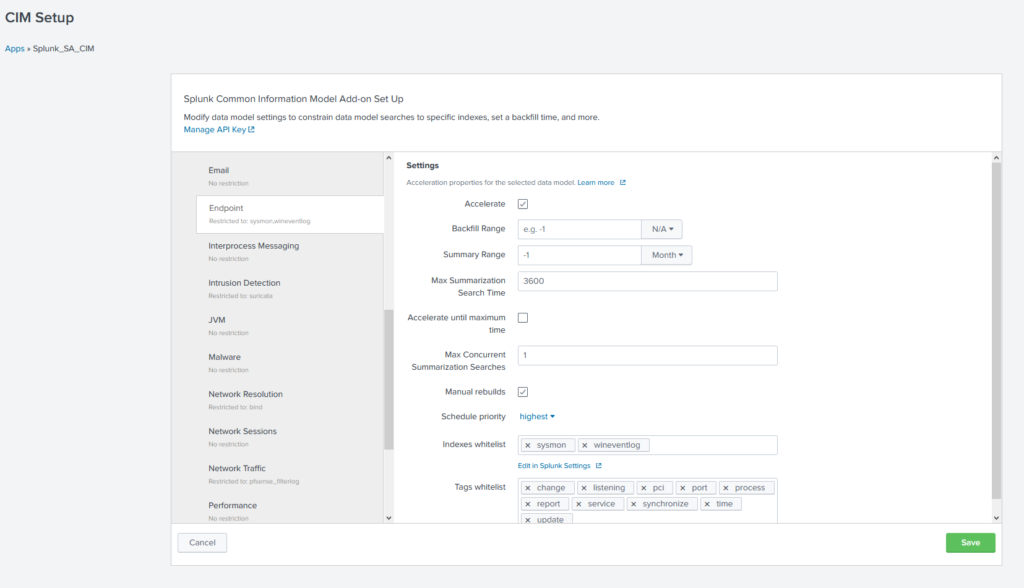

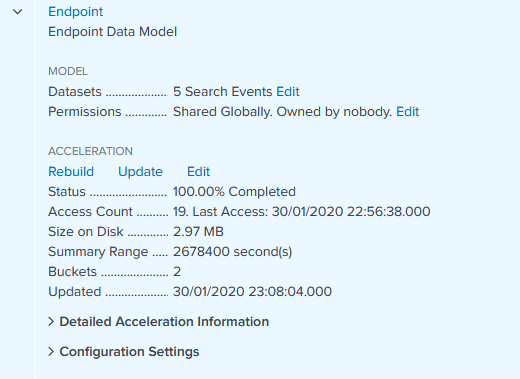

| Vendor/product name of internal products relevant to security/intrusion (e.g. AV/proxy/reverse proxy/IDS/WAF in use) | 4pts each |

Any discoveries that apply to a subsidiary or joint venture are also valid and count towards the score.
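The employee email format is worth 3pts, and a couple of real addresses found in public documents or search results are usually enough to pin it down. The sketch below tests each known (first name, last name, address) sample against a set of candidate formats and keeps only those consistent with every sample. The template names and the list itself are hypothetical and far from exhaustive.

```python
# Hypothetical candidate formats for the local part of an address.
TEMPLATES = {
    "first.last": lambda f, l: f"{f}.{l}",
    "flast": lambda f, l: f"{f[0]}{l}",
    "firstl": lambda f, l: f"{f}{l[0]}",
    "first_last": lambda f, l: f"{f}_{l}",
    "first": lambda f, l: f,
}


def infer_email_format(samples):
    """Return the template names that explain every (first, last, email) sample."""
    candidates = set(TEMPLATES)
    for first, last, email in samples:
        f, l = first.lower(), last.lower()
        if not f or not l:
            continue  # names are needed to test a template
        local = email.split("@", 1)[0].lower()
        candidates &= {name for name, build in TEMPLATES.items() if build(f, l) == local}
    return sorted(candidates)
```

If more than one template survives, more samples are needed. The same matching could in principle be tried against leaked internal usernames to suggest a username convention.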

A little help?

I’m intending to playtest this as soon as possible, but I would absolutely love to get feedback, whether or not I’ve tested it yet. Please comment or tweet at me (@http_error_418) with suggestions for improvements, more items that could be scored, criticism of the points balance… anything!